What Is the Best Neural Network Model for Temporal Data?

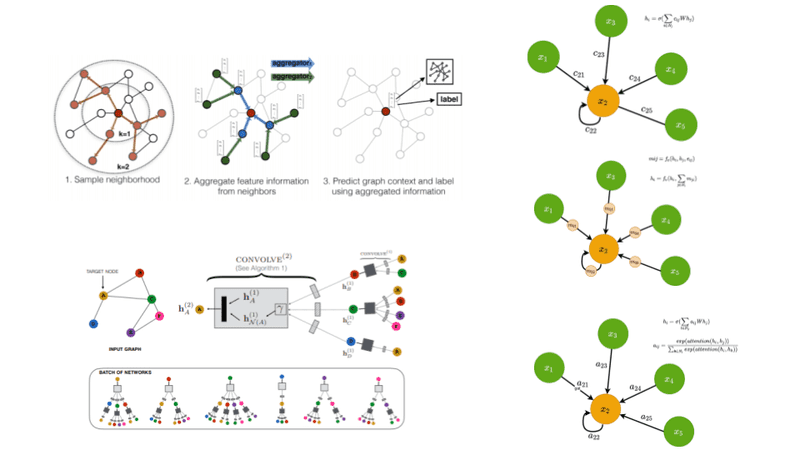

In inductive learning, the model sees only the training data. Finally, after k iterations, the graph neural network model uses the final node states to produce an output that makes a decision about each node.
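The k-iteration process can be illustrated with a toy sketch. It makes several simplifying assumptions. Node states are single numbers. Neighbors are aggregated by their mean. Each update is a fixed 50/50 mix of a node's own state and that mean. The output function is a hypothetical threshold readout. A real GNN learns the update and output functions instead.

```python
# Toy sketch: k synchronous state-update iterations, then a per-node output.
# Assumptions (illustrative only): scalar states, mean aggregation, a fixed
# 0.5/0.5 update rule, and a threshold readout as the output function.


def propagate(states, neighbors, k):
    """Run k state-update iterations; states maps node -> float."""
    for _ in range(k):
        new_states = {}
        for node, h in states.items():
            nbrs = neighbors.get(node, [])
            # Aggregate neighbor states; an isolated node uses its own state.
            agg = sum(states[n] for n in nbrs) / len(nbrs) if nbrs else h
            # Update: mix the node's own state with the aggregated message.
            new_states[node] = 0.5 * h + 0.5 * agg
        states = new_states
    return states


def output(final_states, threshold):
    """Hypothetical output function: one binary decision per node."""
    return {node: int(h > threshold) for node, h in final_states.items()}


neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
final = propagate({"a": 1.0, "b": 0.0, "c": 0.0}, neighbors, k=2)
labels = output(final, threshold=0.2)
print(final)   # {'a': 0.375, 'b': 0.25, 'c': 0.125}
print(labels)  # {'a': 1, 'b': 1, 'c': 0}
```

After two iterations, the signal that started at node `a` has reached node `c`. The output function then reads only the final states.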


Graphs are a kind of data structure that models a set of objects (nodes) and their relationships (edges).
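As a small illustration, the same set of nodes and edges can be stored either as an adjacency list or as a dense adjacency matrix. The node names and edges below are made up, and the helper functions are hypothetical.

```python
# Minimal sketch: a graph given as a node list plus an edge list, converted
# to an adjacency list and a dense adjacency matrix. Example data is made up.


def adjacency_list(nodes, edges, directed=False):
    adj = {n: [] for n in nodes}
    for u, v in edges:
        adj[u].append(v)
        if not directed:
            adj[v].append(u)  # undirected: record the relationship both ways
    return adj


def adjacency_matrix(nodes, edges, directed=False):
    index = {n: i for i, n in enumerate(nodes)}
    m = [[0] * len(nodes) for _ in nodes]
    for u, v in edges:
        m[index[u]][index[v]] = 1
        if not directed:
            m[index[v]][index[u]] = 1
    return m


nodes = ["alice", "bob", "carol"]
edges = [("alice", "bob"), ("bob", "carol")]
adj = adjacency_list(nodes, edges)
mat = adjacency_matrix(nodes, edges)
print(adj)  # {'alice': ['bob'], 'bob': ['alice', 'carol'], 'carol': ['bob']}
print(mat)  # [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
```

An adjacency list stays compact for sparse graphs. The matrix form is what many GNN formulas are written in.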

Recently, research on analyzing graphs with machine learning has been receiving more and more attention because of the great expressive power of graphs. Graph neural network (GNN) research has surged to become one of the hottest topics in machine learning this year. It is the implementation of the paper.

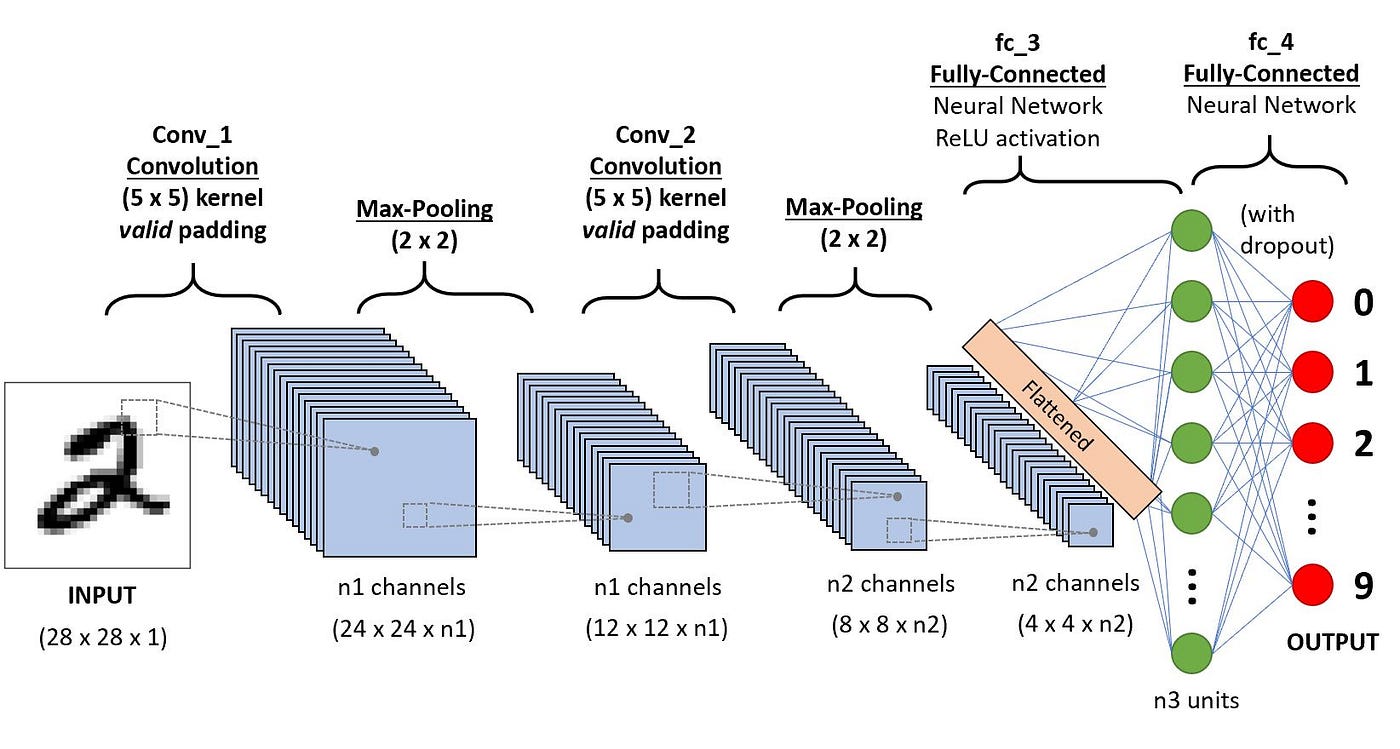

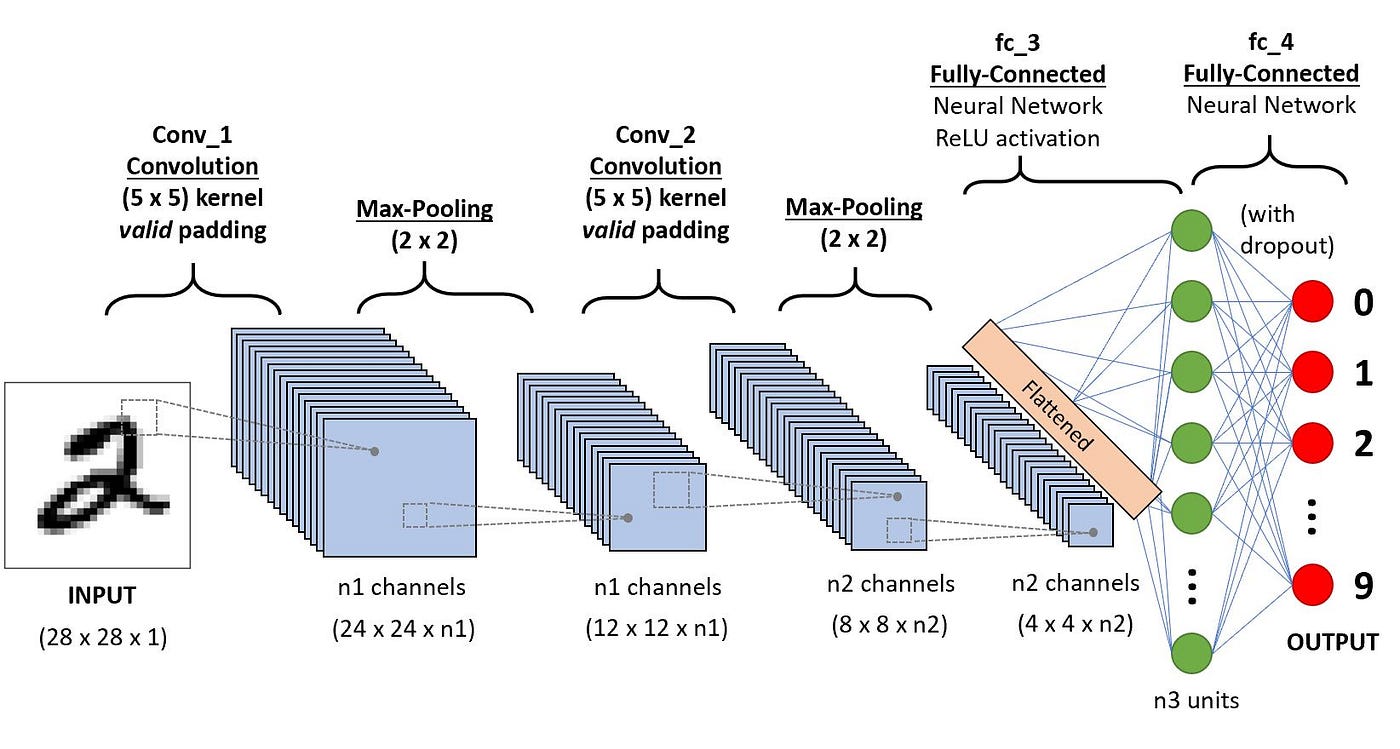

An input image has many spatial and temporal dependencies; a CNN captures these characteristics using relevant filters (kernels). In the majority of these works, the input to the network is a stack of consecutive video frames. The model is therefore expected to implicitly learn spatio-temporal, motion-dependent features in the first layers, which can be a difficult task. A common way to estimate causal relations between different regions of interest (ROIs) in a brain network is to fit a multivariate vector autoregressive (VAR) model to …
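For the VAR approach, here is a minimal sketch of fitting a first-order model, `x_t = A x_{t-1} + e_t`, to two signals by ordinary least squares. It makes several simplifications for illustration:

- it handles only two variables;
- it uses a lag of one and no intercept;
- it is tested on noise-free synthetic data.

The function name is hypothetical. The off-diagonal entries of `A` are the lagged cross-effects that VAR-based causal analyses inspect.

```python
# Minimal sketch: least-squares fit of a VAR(1) model x_t = A x_{t-1} + e_t
# for two variables (e.g. two ROI signals). Two variables, lag 1, and no
# intercept are simplifying assumptions.


def fit_var1_2d(series):
    """series: list of (x1, x2) tuples ordered in time. Returns the 2x2 A."""
    # S_xy = sum x_t x_{t-1}^T and S_yy = sum x_{t-1} x_{t-1}^T.
    sxy = [[0.0, 0.0], [0.0, 0.0]]
    syy = [[0.0, 0.0], [0.0, 0.0]]
    for prev, cur in zip(series[:-1], series[1:]):
        for i in range(2):
            for j in range(2):
                sxy[i][j] += cur[i] * prev[j]
                syy[i][j] += prev[i] * prev[j]
    # Invert the 2x2 matrix S_yy.
    det = syy[0][0] * syy[1][1] - syy[0][1] * syy[1][0]
    inv = [[syy[1][1] / det, -syy[0][1] / det],
           [-syy[1][0] / det, syy[0][0] / det]]
    # Normal equations give A = S_xy @ inv(S_yy).
    return [[sum(sxy[i][k] * inv[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# Synthetic series in which variable 1 drives variable 2 (A[1][0] != 0).
A_true = [[0.5, 0.0], [0.4, 0.3]]
x = (1.0, 1.0)
series = [x]
for _ in range(10):
    x = (A_true[0][0] * x[0] + A_true[0][1] * x[1],
         A_true[1][0] * x[0] + A_true[1][1] * x[1])
    series.append(x)

A_hat = fit_var1_2d(series)  # recovers A_true up to rounding error
```

On real, noisy recordings one would normally use a statistics library, select the lag order, and test the significance of the cross-coefficients.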

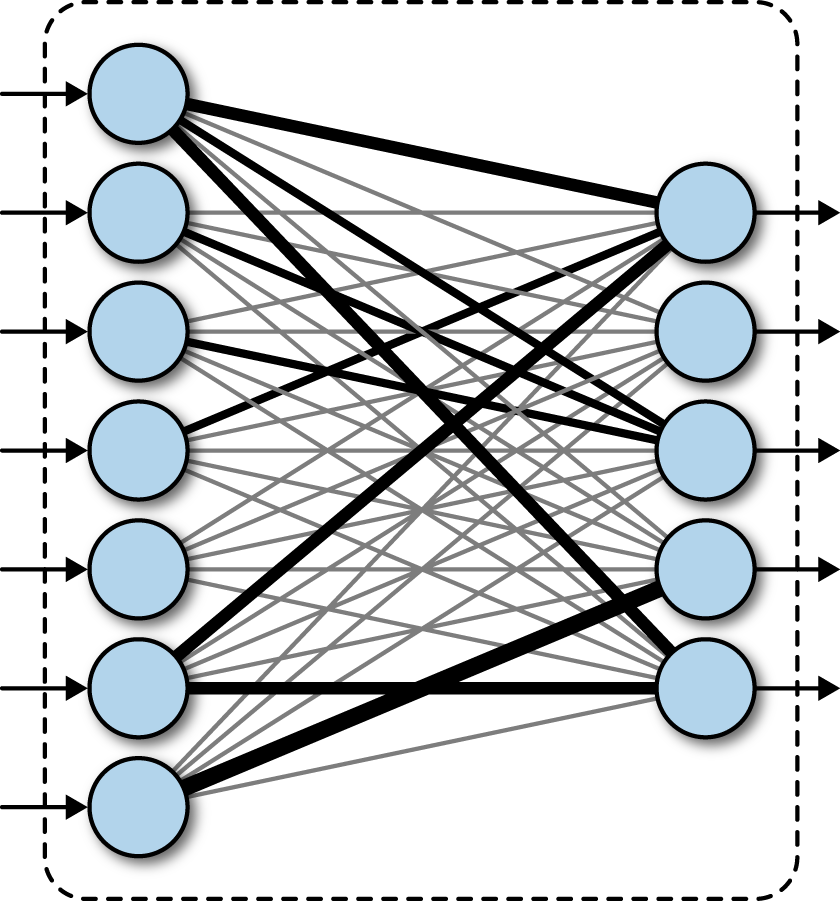

Probabilistic models provide insight into the uncertainty of a forecast. All three neural networks (RNN, TCN, and the Temporal Fusion Transformer) can generate probabilistic forecasts. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain.
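One common way to make a network's forecast probabilistic is to predict several quantiles and train each one with the quantile (pinball) loss. The sketch below computes that loss in plain Python. Whether a particular RNN, TCN, or Temporal Fusion Transformer implementation uses quantiles or a parametric likelihood depends on the library, so treat this as one option, not the method behind the text. The forecast values are hypothetical.

```python
# Minimal sketch of the quantile (pinball) loss used to train quantile
# forecasts. Forecast values below are hypothetical.


def pinball_loss(y_true, y_pred, q):
    """Average quantile loss for quantile level q in (0, 1)."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        diff = y - p
        # Under-prediction (diff > 0) is weighted by q,
        # over-prediction (diff < 0) by (1 - q).
        total += max(q * diff, (q - 1) * diff)
    return total / len(y_true)


y_true = [10.0, 12.0, 11.0]
p10 = [8.0, 9.0, 9.0]     # hypothetical 10th-percentile forecast
p90 = [13.0, 14.0, 12.0]  # hypothetical 90th-percentile forecast
loss_90 = pinball_loss(y_true, p90, 0.9)  # about 0.2
```

The band between the 10th- and 90th-percentile forecasts forms an 80% prediction interval. This interval is the uncertainty information a point forecast lacks.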

Artificial neural networks (ANNs), usually simply called neural networks (NNs), are computing systems inspired by the biological neural networks that constitute animal brains. Recurrent neural networks (RNNs) are feedforward neural networks (FFNNs) with a time twist. The convolutional neural network (CNN) is the result of continuous advances in computer vision with deep learning.
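To make the artificial neuron concrete, here is a sketch of a single neuron and a tiny two-layer feedforward network built from it. The neuron computes a weighted sum of its inputs, adds a bias and applies a sigmoid. The weights are arbitrary values, not trained parameters. The feedforward function keeps no state between calls, and that is the property RNNs relax.

```python
import math

# Minimal sketch of an artificial neuron and a tiny feedforward network.
# Weights are arbitrary illustrative values, not trained parameters.


def neuron(inputs, weights, bias):
    # Weighted sum of the inputs plus a bias, passed through a sigmoid.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def feedforward(x):
    # Hidden layer of two neurons, then one output neuron. Information flows
    # strictly forward, and nothing is remembered between calls.
    h = [neuron(x, [0.5, -0.5], 0.0), neuron(x, [1.0, 1.0], -1.0)]
    return neuron(h, [1.0, 1.0], 0.0)


y = feedforward([1.0, 2.0])
```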

This means that the order in which you feed the input and train the network matters. Trained on a large, heterogeneous, real-world dataset, our CovRNN models showed high prediction accuracy and transferability, with consistently good performance on multiple external datasets. Neurons are fed information not just from the previous layer but also from themselves, from the previous pass.
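The feedback loop can be sketched with a single Elman-style recurrent unit with a scalar state, `h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)`. The weights are arbitrary illustrative values. Feeding the same three inputs in two different orders gives different final states, which is why input order matters for an RNN.

```python
import math

# Minimal sketch of a scalar Elman-style recurrent unit:
#   h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
# Weights are arbitrary illustrative values.


def rnn_final_state(xs, w_x=1.0, w_h=0.5, b=0.0):
    h = 0.0  # initial hidden state
    for x in xs:
        # The previous state h feeds back into the update: the "time twist".
        h = math.tanh(w_x * x + w_h * h + b)
    return h


forward = rnn_final_state([1.0, 0.0, 0.0])  # early input has decayed
reverse = rnn_final_state([0.0, 0.0, 1.0])  # same inputs, different order
```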

We have implemented another neural network for deep forecasting: the Temporal Fusion Transformer, the youngest sibling of the RNN and TCN approaches we discussed in the preceding two articles. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Our results show the feasibility of a COVID-19 predictive model that delivers high accuracy without the need for complex feature engineering.
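The attention idea behind models such as the Temporal Fusion Transformer and STANet can be illustrated with generic single-head scaled dot-product attention. This is a simplified version, not the exact attention used in either model. The queries, keys and values are supplied directly instead of coming from learned projections, and the example vectors are made up.

```python
import math

# Minimal sketch of single-head scaled dot-product attention on plain lists.
# Queries, keys, and values are given directly (no learned projections).


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output: attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Three time steps; each key row stands in for an encoded observation.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0], [2.0], [3.0]]
out, w = attention([[1.0, 0.0]], keys, values)
```

The query matches steps 1 and 3 equally, so those two steps receive equal weight, and step 2 receives less.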

Train the model and check its accuracy on the test data. If a new node is added to the graph, we need to retrain the model. They are not stateless.

To address these challenges, we propose to model the traffic flow as a diffusion process on a directed graph. We introduce the Diffusion Convolutional Recurrent Neural Network (DCRNN), a deep learning framework for traffic forecasting that incorporates both spatial and temporal dependencies in the traffic flow. An illustration of a node state update based on the information from its neighbors. The Graph Neural Network Model.
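DCRNN's diffusion convolution can be sketched as a weighted sum of K random-walk steps on the directed graph. The steps are taken along outgoing edges (`D_O^-1 W`) and along incoming edges (`D_I^-1 W^T`). This version simplifies the model in three ways:

- it uses one scalar feature per node;
- it uses fixed, hand-picked `theta` coefficients instead of learned filters;
- it omits the recurrent (GRU) part of the model.

The helper names are hypothetical.

```python
# Minimal sketch of a bidirectional diffusion convolution on a directed graph:
#   y = sum_k (theta_out[k] * (D_O^-1 W)^k + theta_in[k] * (D_I^-1 W^T)^k) x
# One scalar feature per node; theta values are illustrative, not learned.


def matvec(m, x):
    return [sum(m[i][j] * x[j] for j in range(len(x))) for i in range(len(m))]


def random_walk(w):
    # Row-normalize by each node's degree; rows with no edges stay zero.
    out = []
    for row in w:
        deg = sum(row)
        out.append([v / deg if deg else 0.0 for v in row])
    return out


def transpose(w):
    return [list(col) for col in zip(*w)]


def diffusion_conv(w, x, theta_out, theta_in):
    """w[i][j] > 0 means an edge i -> j; x is the node signal."""
    p_out = random_walk(w)            # D_O^-1 W (out-degree normalized)
    p_in = random_walk(transpose(w))  # D_I^-1 W^T (in-degree normalized)
    y = [0.0] * len(x)
    x_out, x_in = list(x), list(x)    # k = 0 terms: identity applied to x
    for t_o, t_i in zip(theta_out, theta_in):
        y = [yi + t_o * a + t_i * b for yi, a, b in zip(y, x_out, x_in)]
        x_out = matvec(p_out, x_out)  # one more diffusion step along out-edges
        x_in = matvec(p_in, x_in)     # one more diffusion step along in-edges
    return y


# Road segment chain 0 -> 1 -> 2 (e.g. three sensors in the driving direction).
W = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]
y = diffusion_conv(W, [0.0, 0.0, 1.0], theta_out=[1.0, 0.5], theta_in=[0.0, 0.5])
print(y)  # [0.0, 0.5, 1.0]
```

In the example, a signal at the downstream sensor spreads one hop to its upstream neighbor through the outgoing-edge walk. This is the kind of directional dependency that motivates modeling traffic on a directed graph.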

The output function is defined as … GNNs have seen a series of recent successes in problems from the fields of biology, chemistry, social science, physics, and many others. In our case, these are the nodes of a large graph where we want to predict the node labels.

So far, GNN models have been developed primarily for static graphs that do not change over time. Graphs can be used to represent a large number of systems across various … Neural network types include:

- single-layer NNs such as the Hopfield network;
- multilayer feedforward NNs, for example standard backpropagation, functional link, and product unit networks;
- temporal NNs such as the Elman and Jordan simple recurrent networks, as well as time-delay neural networks;
- self-organizing NNs such as the Kohonen self-organizing …
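Time-delay neural networks see a sequence through a tapped delay line, so each input is a fixed-length window of past values. The sketch below builds those windows and the next-step targets. The window length `delays` is an assumed parameter, and the function name is hypothetical.

```python
# Minimal sketch of tapped-delay-line inputs for a time-delay network:
# each example is the previous `delays` values, and the target is the next one.


def delay_embed(series, delays):
    inputs, targets = [], []
    for t in range(delays, len(series)):
        inputs.append(series[t - delays:t])  # x_{t-d}, ..., x_{t-1}
        targets.append(series[t])            # value to predict at time t
    return inputs, targets


X, y = delay_embed([1, 2, 3, 4, 5], delays=3)
print(X)  # [[1, 2, 3], [2, 3, 4]]
print(y)  # [4, 5]
```

Unlike the Elman and Jordan networks, which carry context through feedback connections, this approach gives the network a fixed memory of exactly `delays` steps.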

Here we provide the PyTorch implementation of the spatial-temporal attention neural network (STANet) for remote sensing image change detection. Each connection, like the synapses in a biological brain, can … In transductive learning, the model has already encountered both the training and the test input data.
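The transductive setting can be sketched on a toy graph. Every node, including the test nodes, is visible, but labels are revealed only for the training nodes. Accuracy is then measured on the held-out nodes. A neighbor majority vote stands in for a trained GNN here. It is an illustrative assumption, not a method from the text, and the graph and labels are made up.

```python
from collections import Counter

# Minimal sketch of transductive node classification: the whole graph is
# visible, but only training nodes have labels. A neighbor majority vote
# stands in for a trained GNN (an illustrative assumption).


def predict_transductive(neighbors, train_labels, test_nodes):
    preds = {}
    for node in test_nodes:
        votes = Counter(train_labels[n] for n in neighbors[node] if n in train_labels)
        # Fall back to the most common training label if no neighbor is labeled.
        preds[node] = (votes or Counter(train_labels.values())).most_common(1)[0][0]
    return preds


def accuracy(preds, true_labels):
    correct = sum(preds[n] == true_labels[n] for n in preds)
    return correct / len(preds)


neighbors = {
    "a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b", "d"],
    "d": ["c", "e", "f"], "e": ["d", "f"], "f": ["d", "e"],
}
train_labels = {"a": 0, "b": 0, "e": 1, "f": 1}
true_labels = {"c": 0, "d": 1}  # held-out test labels
preds = predict_transductive(neighbors, train_labels, ["c", "d"])
acc = accuracy(preds, true_labels)  # 1.0 on this toy graph
```

Because predictions rely on the specific graph seen during training, a node added later is not covered. This is why the text notes that a new node requires retraining in this setting.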

Since the model data of the fully connected layers are periodically updated due to fine-tuning at the … In [11], an HMAX architecture for … Add the pretraining weight of PAM.

They have connections between passes, that is, connections through time. The Web Neural Network API (WebNN) defines a web-friendly, hardware-agnostic abstraction layer that makes use of the machine learning capabilities of operating systems and underlying hardware platforms without being tied to platform-specific capabilities.

